Consciousness

by Andrew Boyd

Today, are you conscious? The University of Houston presents this series about the machines that make our civilization run, and the people whose ingenuity created them.

Consciousness is so familiar we rarely give it a second thought. Yet it remains a great puzzle to philosophers.

As far as we know, consciousness arises from the working of the brain. The brain is a physical object made up of material elements, just like rocks, celery, and my toaster oven. How do unconscious bits and pieces give rise to consciousness?

While you and I may not fully understand why, we can still agree that consciousness exists, and even marvel that it does. However, as Berkeley philosopher John Searle argues, modern philosophical inquiry suffers from a fundamental "terror of consciousness." Consciousness doesn't easily fit into prevailing theories of the mind, and the essence of Searle's writing is that, when it comes to the mind, much of modern philosophy is sorely off-track.

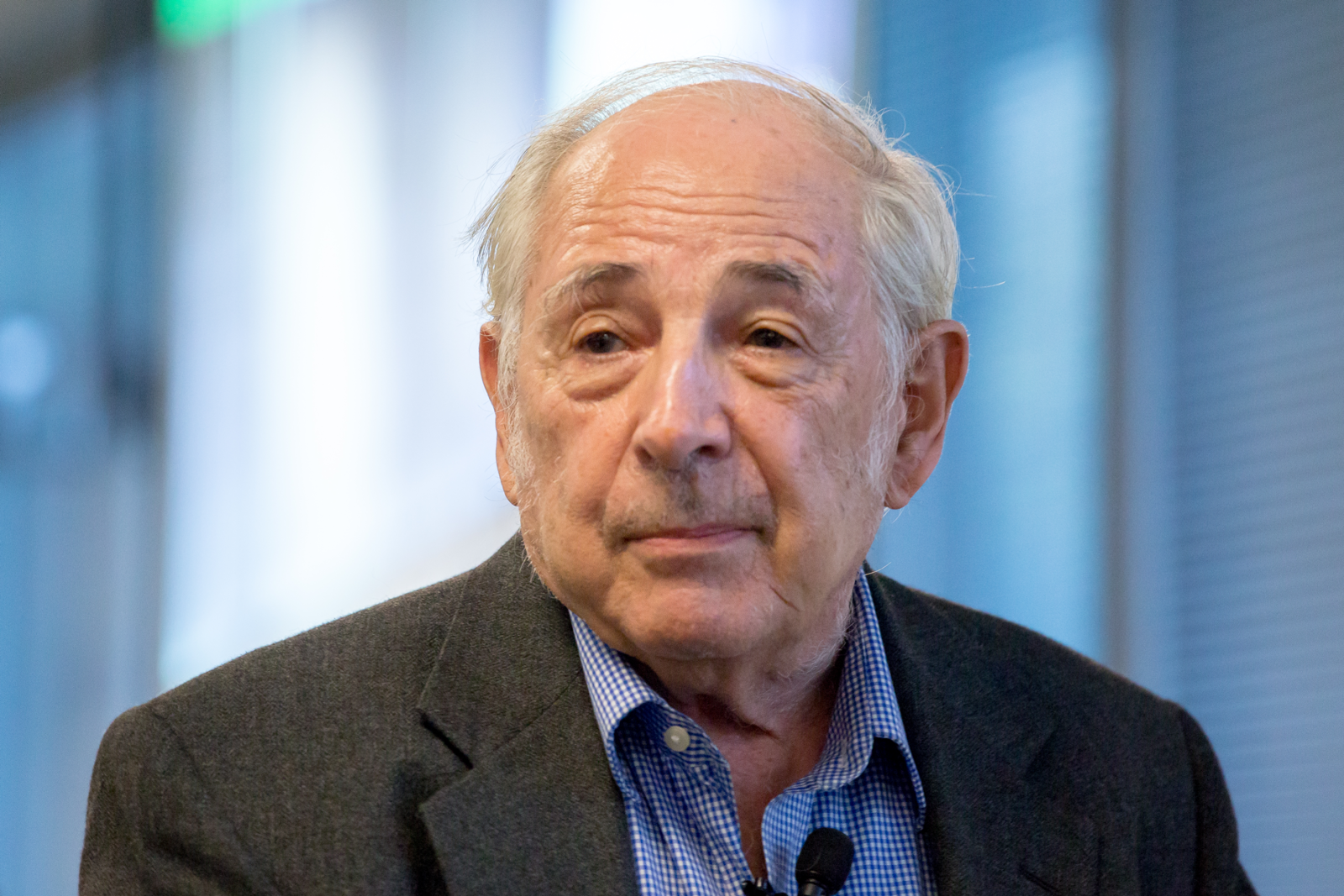

John Searle giving his talk "Consciousness in Artificial Intelligence" at Google in Mountain View. Photo Credit: Wikimedia Commons

Searle speaks frankly. Challenging those who deny the very existence of consciousness, he wonders how to argue with them. "Should I pinch them to remind them they are conscious?" remarks Searle. "Should I pinch myself and report the results in the Journal of Philosophy?"

Searle's most famous argument criticizes a theory known as strong artificial intelligence. In this view of the world, when a computer behaves like a human, it also experiences thoughts, feelings, and understanding -- the traits we associate with consciousness. When we weep for the mechanical boy in Steven Spielberg's AI, or feel compassion for the haunting Ava in the film Ex Machina, and do so because we believe they're conscious, we're accepting the theory of strong artificial intelligence.

Artificial Intelligence. Photo Credit: Pixabay

Advocates of the theory are keenly influenced by modern computers. Brains are like computers, they reason, and minds are like computer programs; so a mind can exist on any suitable computer, whether it's made of gray matter, silicon, or a 'suitably arranged collection of beer cans.' Consciousness isn't anything special, proponents would argue; it's just something that happens when you run the right computer program.

Arguing against strong artificial intelligence, Searle imagines himself locked in a room with instruction manuals that allow him to reply to any question or comment posed in Chinese. Searle doesn't understand Chinese, and the manuals don't provide English translations. They simply provide rules that allow him to meaningfully respond to any string of Chinese characters.

Anyone passing messages under the door would conclude that whoever's in the room understands Chinese. But clearly, the occupant, Searle, doesn't comprehend the meaning of anything. In the same way, Searle argues, there's no reason to assume a computer that behaves like a human must also feel, think, and understand like a human.

Searle is one voice among many, and his viewpoints aren't universally shared by his peers. But he reminds us that consciousness shouldn't be casually dismissed. It's a special quality, and a quality we human beings are fortunate to enjoy. We are, in fact, most remarkable engines.

I'm Andy Boyd at the University of Houston, where we're interested in the way inventive minds work.

(Theme music)

This episode is an updated version of episode 1912.

Much of the material in this essay was taken from: John R. Searle, The Rediscovery of the Mind, MIT Press, Cambridge, Massachusetts, 1992. See especially pages 8, 9, 30, 32, 44, 45, and 55.

Searle brings together many of his ideas in the wonderfully lucid book for general readership: John R. Searle, Mind, Language, and Society: Philosophy in the Real World, Basic Books, New York, 1999.

This episode first aired on January 21, 2016.