The Mathematics of Language

Today, let's see what mathematics can tell us about language. The University of Houston Mathematics Department presents this program about the machines that make our civilization run, and the people whose ingenuity created them.

In the novel The Count of Monte Cristo, one of the most memorable characters is Monsieur Noirtier de Villefort. He is completely paralyzed and mute, and yet is able to communicate by blinking his eyes. The system he uses is slow and laborious, and involves running through columns and columns of words in a dictionary. When I first read the novel, I wandered how this could be done more efficiently? Could people capable of only minimal movement communicate with the same speed as you and me? To achieve this, we need a good understanding of the structure of language.

But how can we describe the structure of something so vast and complex as language itself? After all, our languages are sufficiently flexible to capture nearly all of our thoughts and feelings. However, they do obey very precise mathematical rules.

For instance, in the 1930s the American linguist George Kingsley Zipf observed that the length of a word is precisely related to the frequentcy of its use. We use long words infrequently — our sentences are formed mostly by words containing only a few letters. Zipf argued that this is part of a larger "Principle of Least Effort".

If we wish to talk with a paralyzed communicant we should use a list of words that arranged according to the frequency of their use. Such a list would start with short words and contain words of increasing length. Using a regular dictionary is terribly inefficient.

But to do better, we need a deeper understanding of language structure. Words in a sentence are not independent of one another. Likewise, letters do not follow one another at random. For instance, "E" is the most frequent letter in the English language. However, if you see the letter "T" it is more likely to be followed by the letter "H" than by "E" — despite the higher overall frequency of the letter "E". But if we see the letter "T" followed by "H," most of us will guess that the next letter will be "E" — the English language is full of words that start with the arrangement "T. H. E....".

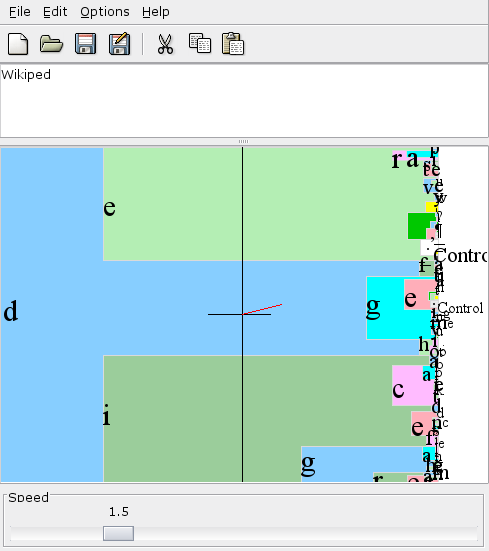

And this structure can be used to minimize the effort of writing: For instance the program Dasher allows you to enter text without using your hands. You can direct a pointer to the first letter in a word using an eye-tracking device. After you've entered the first letter, the program anticipates the most likely letters to follow and displays them more prominently than the others. By shifting your gaze you can then point to the next letter in the word. As letters zoom by on the screen the program seems to anticipate what you mean to say, prominently displaying the most likely sequences of letters. After some practice, you can enter 25 or more words per minute.

Writing, speech and literature seem to be far removed from mathematics. However, mathematics is much more than the study of numbers. It is the study of structures and forms wherever they may appear. And is there anything that humankind has created that has a structure richer and more complex than our language?

This is Krešimir Josić at the University of Houston where we are interested in the way inventive minds work.

Jean-Dominique Bauby suffered a massive stroke that left him completely paralyzed (locked in). He dedicated his memoir, The Diving Bell and the Butterfly, by blinking his left eye.

A recent article in the Proceedings of the National Academy of Science of the USA addresses this issue and provides a number of references http://www.pnas.org/content/early/2011/02/22/1100760108.short?rss=1.

The father of information theory, Claude Shannon, already proposed a Markov model of language.

A readable, but somewhat technical discussion of different probability distribution, including the distribution that governs then frequency of words, is given here http://arxiv.org/pdf/cond-mat/0412004.

Modern speech recognition software uses Hidden Markov Models, among many other techniques http://ieeexplore.ieee.org/xpl/freeabs_all.jsp?arnumber=18626. This paper can be found freely online.

You can try Dasher for yourself http://www.inference.phy.cam.ac.uk/dasher/, or see a demonstration here https://www.youtube.com/watch?v=0d6yIquOKQ0.

Here is a very nice review of the history of speech recognition http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.90.5614&rep=rep1&type=pdf. I was surprised to find that already in 1952 algorithms were developed to recognize spoken digits.

All images are from Wikipedia.