More Than One Computer

Today, when is one computer not enough? The University of Houston's College of Engineering presents this series about the machines that make our civilization run, and the people whose ingenuity created them.

Most of us buy computers for what we do with them -- store pictures, send email, surf the Web. But it's impossible to buy a computer without some idea of how fast it is and how many files we can store on it. We inevitably face decisions about megahertz and gigabytes. Now we have to learn a new term -- multiprocessor or multicore. It represents a huge change in computer technology.

Consider the problem of washing two cars. If I wash them myself, it might take two hours -- one hour for each car. If I get someone to wash one while I wash the other, I can be done in an hour. The total work doesn't change, but the time is cut in half. Multiprocessor computers function the same way.
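The car-washing arithmetic can be written out as a small sketch. The function names below are made up for illustration, and the model assumes each car is washed start to finish by one person, with no time lost to coordination.

```python
import math


def total_work_hours(cars: int, hours_per_car: float) -> float:
    """Total effort, which stays the same however many washers we have."""
    return cars * hours_per_car


def elapsed_hours(cars: int, hours_per_car: float, washers: int) -> float:
    """Time on the clock, assuming one washer per car at a time."""
    # The washer who ends up with the most cars decides when we finish.
    cars_for_busiest_washer = math.ceil(cars / washers)
    return cars_for_busiest_washer * hours_per_car


print(total_work_hours(2, 1.0))   # 2.0 hours of work either way
print(elapsed_hours(2, 1.0, 1))   # 2.0 hours on the clock when I work alone
print(elapsed_hours(2, 1.0, 2))   # 1.0 hour on the clock with a helper
```

Under the same assumptions, three cars and two washers still take two hours, since one washer has to do two cars. Extra hands only help when the work divides evenly among them.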

Using multiple processors seems so simple that we might wonder why it wasn't thought of before. In fact, it was. The idea has been around almost as long as computers. Large companies like IBM and Intel have spent huge sums of money on multiprocessor design. So have universities and funding agencies. So why are we only now starting to see multiprocessor computers?

One reason is shared resources. If I and my car-washing friend are constantly waiting on each other for the hose, it slows us down. In the case of computers, if things aren't handled properly, resource competition can cause the entire system to lock up.
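Here is one way the hose problem might look in a program, sketched with Python threads. The bucket is an added assumption (the example above has only a hose), included because a lock-up usually needs at least two shared items. The sketch avoids the lock-up with a common convention: everyone picks up the shared items in the same order.

```python
import threading


def wash_cars(washers):
    """Run one thread per washer; all of them share one hose and one bucket."""
    hose = threading.Lock()
    bucket = threading.Lock()   # hypothetical second shared resource
    finished = []

    def wash(name):
        # Every washer takes the hose first, then the bucket.
        # If one washer took them in the opposite order, each could end up
        # holding one item while waiting forever for the other.
        with hose:
            with bucket:
                finished.append(name)

    threads = [threading.Thread(target=wash, args=(name,)) for name in washers]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    return sorted(finished)


print(wash_cars(["me", "friend"]))
```

While one washer holds the hose, the other simply waits, which is the slowdown described above. The fixed pickup order is what keeps that waiting from turning into a permanent stall.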

Multiprocessor computers are also more complicated to program than their single-processor counterparts. Programmers need languages and tools that let them tell computers what can and can't be done in parallel. Debugging becomes a lot harder. When only one processor's involved, programs work in a single, repeatable sequence of steps. That's not the case when work is farmed out to multiple processors. And, even if work truly can be done in parallel, it can be hard to find and fix any programming mistakes.

Take, for example, the tasks "open the door" and "walk through the doorway." If we ask a single person to do both in the correct order -- no problem. But when two different people do the tasks, they have to coordinate so the tasks still happen in the right order. Reverse the order, try to walk through the door before it's open, and we have a big problem. Programs designed for multiprocessor computers face exactly this situation.
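The door example can be sketched with two Python threads, one for each person. The names are invented for illustration. The walker is deliberately started first, and a `threading.Event` acts as the signal that the door is open.

```python
import threading


def open_then_walk():
    """Two threads do the two tasks; an Event keeps them in the right order."""
    door_open = threading.Event()
    log = []

    def opener():
        log.append("open the door")
        door_open.set()            # announce that the door is now open

    def walker():
        door_open.wait()           # stand still until the door is open
        log.append("walk through the doorway")

    walker_thread = threading.Thread(target=walker)
    opener_thread = threading.Thread(target=opener)
    walker_thread.start()          # the walker gets going first on purpose
    opener_thread.start()
    walker_thread.join()
    opener_thread.join()
    return log


print(open_then_walk())
```

Without the `wait()` call, the walker could record its step before the door was opened, and whether that happened would depend on timing that changes from run to run.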

Programming them is complicated enough that multiprocessing never really caught on for home computers. Instead, chip-makers sped up processing by simply pushing instructions through a single processor faster. That satisfied the market for many years.

But there was a limit to how far this strategy could be taken. The laws of physics tell us that the faster we push instructions through a processor, the more heat we produce. Chip manufacturers reached a point where their processors simply burned themselves up. And multiprocessing had to be taken seriously.

Dual-core processors have now established themselves in computer stores. Quad-core processors are making their presence felt. And this is just the beginning. Only with time will we see how far multicore design will take us. With the new computing power multicore chips promise, who knows what marvelous new advances we can expect to see in the coming years?

I'm Andy Boyd, at the University of Houston, where we're interested in the way inventive minds work.

Faster Chips are Leaving Programmers in Their Dust. The New York Times, December 17, 2007, pg. C1.

See also: Multi-core (computing). (2007). Retrieved January 6, 2008 from Wikipedia.

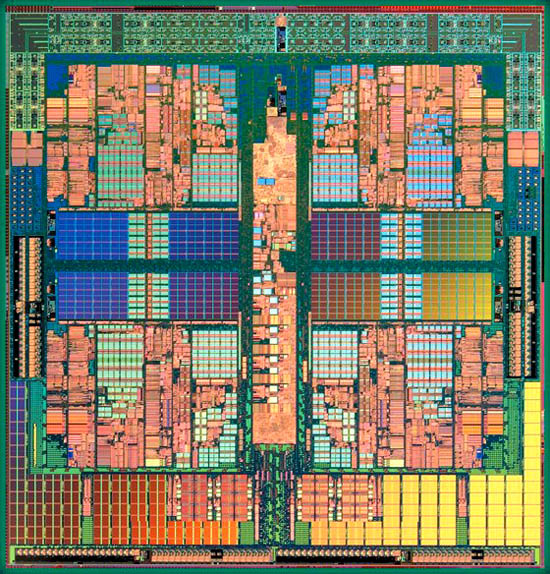

Quad-Core AMD Opteron™ processor, showing its four distinct cores